March 21st, 2017

IDAH is pleased to host our Annual Workshop and Lecture with Tanya Clement, Assistant Professor in the School of Information at the University of Texas at Austin.

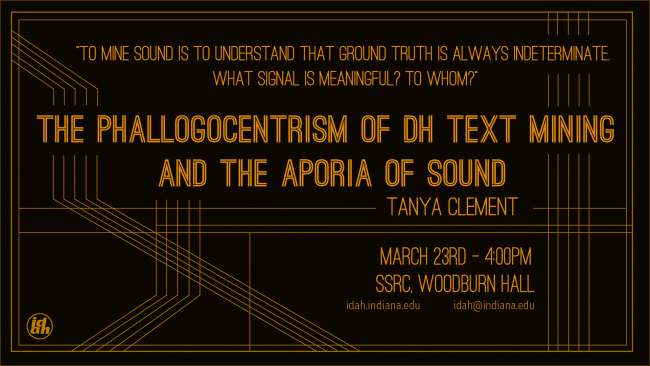

Clement's lecture on March 23, "The Phallogocentrism of DH Text Mining and the Aporia of Sound," will focus on critical questions that emerge when creating digital infrastructure for audio analysis. Whose ear are we modeling in analysis? What is audible and to whom? The workshop on March 24, "Audio Analysis for Poetry, Oratory, and Radio Collections," is a methods session in audio analysis software. Please see below for further descriptions of both the lecture and workshop.

Clement has published widely on her work. Two recent articles address the themes of her lecture and workshop: "Towards a Rationale of Audio-Text" in Digital Humanities Quarterly and "Measured Applause: Toward a Cultural Analysis of Audio Collections" in Journal of Cultural Analytics.

LECTURE: “The Phallogocentrism of DH Text Mining and the Aporia of Sound”

March 23, 4pm, Social Sciences Research Commons

Description: The practice of text mining in digital humanities is phallogocentric. Text mining, a particular kind of data mining in which predictive methods are deployed for pattern discovery in texts, is primarily focused on pre-assumed meanings of The Word. In order to determine whether or not the machine has found patterns in text mining, we begin with a “ground truth” or labels that signify the presence of meaning. This work typically presupposes a binary logic between lack and excess (Derrida, Dissemination, 1981). There is meaning in the results or there is not. Sound, in contrast, is aporetic. To mine sound is to understand that ground truth is always indeterminate. Humanists have few opportunities to use advanced technologies for analyzing sound archives, however. This talk describes the HiPSTAS (High Performance Sound Technologies for Access and Scholarship) Project, which is developing a research environment for humanists that uses machine learning and visualization to automate processes for analyzing sound collections. HiPSTAS engages digital literacy head-on in order to invite humanists into concerns about machine learning and sound studies. Hearing sound as digital audio means choosing filter banks, sampling rates, and compression scenarios that mimic the human ear.

Unless humanists know more about digital audio analysis, how can we ask whose ear we are modeling in analysis? What is audible, and to whom? Without knowing about playback parameters, how can we ask what signal is noise? What signal is meaningful? To whom? Clement concludes with a brief discussion of observations on the efficacy of using machine learning to generate data about spoken-word sound collections in the humanities.

WORKSHOP: “Audio Analysis for Poetry, Oratory, and Radio Collections”

March 24, 10am-12pm, Room W144, Learning Commons, Herman B Wells Library

Description: This beginner’s audio analysis workshop is part of the HiPSTAS (High Performance Sound Technologies for Access and Scholarship) project. We will introduce participants to essential issues that DH scholars, who are often more familiar with working with text, must face in understanding the nature of audio texts such as poetry readings, oral histories, speeches, and radio programs. Understanding what users of sound collections want to do and what kinds of research questions are viable in the context of audio analysis is only a first step. We will also introduce participants to techniques in advanced computational analysis, such as annotation, classification, and visualization, that are essential to machine learning workflows, using tools such as Sonic Visualiser, ARLO, and pyAudioAnalysis. In the workshop, we will walk through a sample workflow for audio machine learning. This workflow includes developing a tractable machine-learning problem, creating and labeling audio segments, running machine learning queries, and validating results. As a result of the workshop, participants will be able to consider potential use cases for which they might use advanced technologies to augment their research on sound. In the process, they will also be introduced to the possibilities of sharing workflows for enabling such scholarship with archival sound recordings at their home institutions. The datasets and tools used in the workshop will be freely available for continued study afterward.

This workshop is open to any scholars who are interested in learning to use machine learning and visualization to analyze audio files of interest to the humanities, such as poetry readings, oral histories, speeches, and radio programs. There are no prerequisites, but familiarity with an audio collection of interest will help ground participants in their own use cases.

Participants are expected to bring a laptop (any operating system) as well as headphones or earbuds. All tools used in this workshop are open source or free software. An audio corpus will be provided for the hands-on demo. Programs will need to be downloaded in advance of the workshop. Program downloads and instructions are available here: https://github.com/hipstas/audio-ml-introduction/blob/master/README.md